Joint All-Domain Operations: Redesigning the Ecosystems of Engagement

Presented by Parsons

During the last PSC Leadership forum, Parsons provided a broad overview thought piece on Multi-Domain Command and Control (MDC2) and Multi-Domain Operations (MDO). In the 18 months since, there have not been significant tangible changes that would allow Warfighters to modify their practices. However, recognition and acceptance of the need to move away from legacy ways of conducting development, procurement, and execution of operations in this highly dynamic environment has spread across the Department of Defense (DoD) and within the militaries of our allies. This is especially true of how software development, procurement, delivery, and execution connect all actions across the battlespace. Specifically, all operations from the Seabed to Space require coordination, integration, and synchronization to execute Joint All-Domain Operations (JADO). JADO reaches across the spectrum of operations, from Humanitarian efforts and Gray Zone actions to Peer-on-Peer conflict. The concepts and capabilities are not just for military applications, however; they could also apply to finance, transportation, energy, or other sectors.

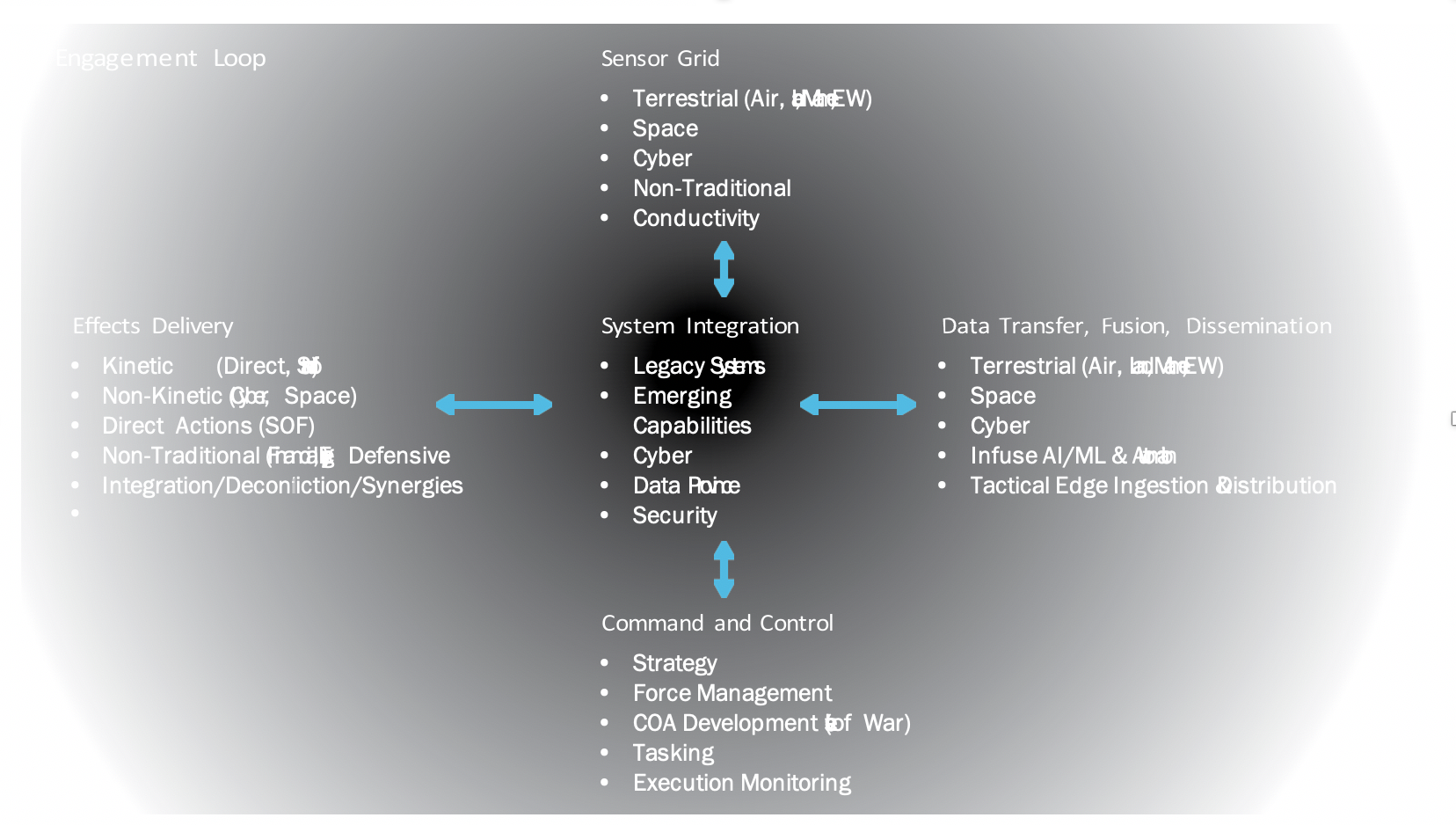

Figure 1 does not directly call out software development and data flow, but they are the second-level enablers for success in all these areas, since they are the path toward creating a C2 neural network appropriate for Joint and Coalition operations. They are the only way to ensure that commanders across the spectrum of conflict have the right information and the ability to access the full variety of options available to them, both kinetic and non-kinetic.

It has become apparent that the only way to execute JADO effectively and efficiently is to ensure a robust, flexible, and scalable Command and Control (C2) system. The new Joint All-Domain Command and Control (JADC2) concept must radically depart from today's legacy C2 construct, which is built around hardware (e.g., planes, ships, vehicles, radios) rather than a seamlessly integrated and synchronized System-of-Systems (SoS) akin to the body’s neural network. This neural network vision is widely accepted, but as acceptance grows, so does the realization of its complexity. At Parsons, we acknowledge the intricacy and depth of this problem set and have emerged as a thought leader in this domain. We began by breaking the highly integrated single system-of-systems, with its unfathomable number of connection points, into the five smaller ecosystems identified in Figure 1. In this construct, it is crucial to maintain the overarching vision of the interconnectedness among all five areas while also maintaining the connection points within each individual ecosystem.

As Dr. Will Roper has noted, an integrated, synchronized data capability is paramount for moving far left in the decision cycle. It enables rapid decisions and can potentially help avoid conflict through actionable intelligence available across domains. Given the limited length of this piece, we have focused on five areas for consideration and thought.

New Developmental Processes

Containerization has opened a whole new approach to software development, deployment, and maintenance, allowing for more scalable, flexible, and robust systems. Specifically, it allows for the development of a cloud-native microservices architecture and rapid deployment as part of any hosted or on-premise cloud-based SoS, on physical network hardware, or in a hybrid configuration. The features and driving factors for using a microservices architecture are analogous to those of a SoS: both share a common approach and the need to break capabilities or applications into separate, non-monolithic pieces.

The microservices architecture is designed and implemented to support flexible distribution, evolving software development, operational and managerial independence, and emergent behavior. These attributes enable the rapid integration of disparate capabilities that can be tailored to the situation quickly and efficiently.
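To make the idea of independently developed services being composed for a situation more concrete, the following is a minimal Python sketch, not a description of any fielded system. The `ServiceRegistry` class, the `geolocate` and `classify` services, and the message fields are all hypothetical. In-process function calls stand in for the network calls (for example, HTTP or a message bus) that real microservices would use.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical message type passed between services. Real microservices would
# exchange messages over a network protocol, not in-process dictionaries.
Message = Dict[str, object]


@dataclass
class ServiceRegistry:
    """Maps capability names to independently developed service handlers."""
    services: Dict[str, Callable[[Message], Message]] = field(default_factory=dict)

    def register(self, capability: str, handler: Callable[[Message], Message]) -> None:
        # Registering or replacing one service does not touch any other service.
        self.services[capability] = handler

    def compose(self, capabilities: List[str]) -> Callable[[Message], Message]:
        """Chain the requested capabilities into one tailored processing thread."""
        missing = [c for c in capabilities if c not in self.services]
        if missing:
            raise KeyError(f"no service registered for: {missing}")
        handlers = [self.services[c] for c in capabilities]

        def pipeline(msg: Message) -> Message:
            for handler in handlers:
                msg = handler(msg)
            return msg

        return pipeline


# Two hypothetical services, each owned and versioned separately.
def geolocate(msg: Message) -> Message:
    return {**msg, "grid": f"{msg['lat']:.2f},{msg['lon']:.2f}"}


def classify(msg: Message) -> Message:
    return {**msg, "category": "air" if msg.get("alt_m", 0) > 0 else "surface"}


registry = ServiceRegistry()
registry.register("geolocate", geolocate)
registry.register("classify", classify)
thread = registry.compose(["geolocate", "classify"])
result = thread({"lat": 35.1234, "lon": -117.4567, "alt_m": 3000})
print(result["grid"], result["category"])
```

Each service is registered separately, so one can be replaced or upgraded without rebuilding the others. This models the operational and managerial independence described above.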

DevSecOps

The General Services Administration (GSA) website describes DevSecOps as follows: “DevSecOps improves the lead time and frequency of delivery outcomes through enhanced engineering practices; promoting a more cohesive collaboration between Development, Security, and Operations teams as they work towards continuous integration and delivery” (https://tech.gsa.gov/guides/understanding_differences_agile_devsecops/). The acceptance and promotion of both agile and DevSecOps across the DoD has been a sea-change event for the development, modernization, and implementation of software capabilities. One of the leading programs is the Air Force’s PlatformONE.

The development of the PlatformONE DevSecOps pipeline has been foundational in the creation of the DevSecOps culture that defines the rapid development and delivery of secure software. It includes a container hardening factory that supplies secure containers to the downstream software factories. It also contains the PlatformONE factory, which supplies a push-button pipeline enabling a Continuous Authority to Operate (C-ATO) process for software product teams (and factories). The platform’s deployment process accepts accredited mission applications and automates the process of delivering capabilities to the end user at multiple security levels. These are concepts and processes that must be implemented industry-wide.
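One way to picture the hand-off between a container hardening factory and downstream software factories is an admission check. The check accepts an image only if it comes from the hardened registry and is pinned to an immutable digest. The sketch below assumes a hypothetical registry host (`hardened.example.org`) and a simple string format for image references. It illustrates the policy idea only and is not how PlatformONE implements it.

```python
import re
from typing import Tuple

# Matches an image reference pinned to an immutable sha256 content digest.
DIGEST_PIN = re.compile(r"@sha256:[0-9a-f]{64}$")


def admit(image_ref: str, hardened_registry: str) -> Tuple[bool, str]:
    """Accept an image only if it comes from the hardened registry and is
    pinned by digest, so downstream builds use exactly the scanned image."""
    prefix = hardened_registry.rstrip("/") + "/"
    if not image_ref.startswith(prefix):
        return False, "rejected: not from hardened registry"
    if not DIGEST_PIN.search(image_ref):
        # Mutable tags such as ":latest" can silently change after hardening.
        return False, "rejected: not pinned to a sha256 digest"
    return True, "admitted"


REGISTRY = "hardened.example.org"  # hypothetical registry host
candidates = [
    REGISTRY + "/base/python@sha256:" + "a" * 64,
    "docker.io/library/python:3.12",
    REGISTRY + "/base/python:latest",
]
decisions = [admit(ref, REGISTRY) for ref in candidates]
for ref, decision in zip(candidates, decisions):
    print(decision, ref)
```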

Along with containerization, container hardening should be implemented in a Continuous Integration/Continuous Deployment (CI/CD) pipeline (e.g., a GitLab CI runner), kept under source control, and standardized across all container build processes. CI/CD pipelines can provide automated notifications of everything from security scan results to build failures. This significantly reduces development time because issues are identified and resolved early and often. These are more than just general approaches: Parsons is proving these concepts by working hand in hand with government organizations such as PM Mission Command (PM MC), the Information and Intelligence Warfare Directorate (I2WD), the Air Force Research Lab (AFRL), and others. Together, we are shortening the time it takes to deliver meaningful software updates to the field, so that they arrive quickly enough to be operationally relevant.
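The fail-fast, notify-early behavior described above can be sketched in a few lines of Python. This only models the control flow. In GitLab CI the stages would be declared in a `.gitlab-ci.yml` file and executed by a runner. The stage names and results here are hypothetical stand-ins for real build and scan tools.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Each stage returns (passed, detail). Real pipelines would invoke build tools
# and scanners; these lambdas are stand-ins for illustration only.
Stage = Tuple[str, Callable[[], Tuple[bool, str]]]


@dataclass
class PipelineRun:
    notifications: List[str] = field(default_factory=list)
    passed: bool = True


def run_pipeline(stages: List[Stage]) -> PipelineRun:
    """Run stages in order, record a notification for each, stop at the first failure."""
    run = PipelineRun()
    for name, stage in stages:
        ok, detail = stage()
        status = "PASS" if ok else "FAIL"
        # Notify on every stage so issues surface early, not at release time.
        run.notifications.append(f"[{status}] {name}: {detail}")
        if not ok:
            run.passed = False
            break  # fail fast: later stages never run on a broken artifact
    return run


stages: List[Stage] = [
    ("build", lambda: (True, "image built from hardened base")),
    ("security-scan", lambda: (False, "2 high-severity findings")),
    ("deploy", lambda: (True, "deployed to staging")),
]
result = run_pipeline(stages)
for line in result.notifications:
    print(line)
```

The deploy stage never runs because the scan failed. The developer still receives both notifications immediately.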

Containers have enabled us to quickly accept software solutions from across industry, combine them with our own capabilities and government solutions, and deploy them as seamlessly integrated solutions.

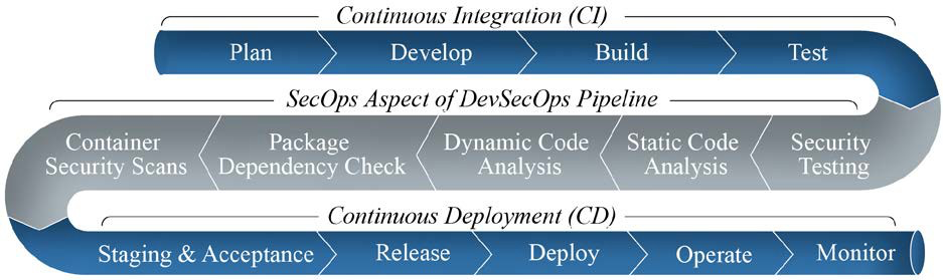

Figure 2 depicts the DevSecOps lifecycle phases with proposed technologies and associated processes. The tools and strategies described above apply to every phase of this lifecycle. Our approach emphasizes the SecOps portion of the CI/CD flow. The platform will be deployed in hardened containers on a Kubernetes (K8s) cluster to enable a push-button, secure factory pipeline.

The platform gives developers, vendors, and users the opportunity to monitor and influence the integration of novel solutions as they are introduced. This includes build requirements in the core architecture, where new containers are first defined using technologies such as Helm charts. Helm charts not only help onboard the new solution into the containerized cluster properly but also force documentation of the container and its requirements. That documentation is necessary for Risk Management Framework (RMF) documentation and accreditation. Additionally, the security phase encompasses several stages, including static code analysis, dynamic code analysis, dependency checking, and container security verification.
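The documentation and dependency-checking gates can be sketched as a single onboarding check. This minimal example assumes the chart's values file has already been parsed from YAML into a Python dictionary. Both the required-field list and the known-vulnerable package list are hypothetical. A real pipeline would draw them from program RMF requirements and from vulnerability advisory feeds.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical fields a program might require in every chart's values before
# onboarding. The real list would come from the program's RMF needs.
REQUIRED_FIELDS = ("image.repository", "image.tag", "resources.limits", "service.ports")

# Hypothetical advisory data: (package, version) pairs known to be vulnerable.
KNOWN_VULNERABLE: Set[Tuple[str, str]] = {("libexample", "1.0.2")}


def lookup(values: Dict, dotted: str) -> Optional[object]:
    """Follow a dotted path such as 'image.tag' through nested dicts."""
    node: object = values
    for key in dotted.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def onboarding_findings(values: Dict, dependencies: Dict[str, str]) -> List[str]:
    """Return every documentation gap and vulnerable dependency found."""
    findings = []
    for field_path in REQUIRED_FIELDS:
        # Missing or empty values count as undocumented.
        if lookup(values, field_path) in (None, "", [], {}):
            findings.append(f"undocumented: {field_path}")
    for package, version in sorted(dependencies.items()):
        if (package, version) in KNOWN_VULNERABLE:
            findings.append(f"vulnerable dependency: {package}=={version}")
    return findings


values = {
    "image": {"repository": "registry.example/app", "tag": "1.4.0"},
    "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
    "service": {},  # ports were never documented
}
deps = {"libexample": "1.0.2", "otherlib": "3.1.0"}
findings = onboarding_findings(values, deps)
print(findings)
```

An empty findings list would let the container proceed. Any finding blocks onboarding until it is documented or fixed.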

Data Rights

Data rights continue to be a point of contention. If we are to truly provide the nation with an effective, scalable, and flexible C2 construct, all technology funded and developed exclusively under government efforts should be transferable with unlimited data rights. For technology that was developed previously but augmented under government funding, government purpose rights would be appropriate. Technology that has been created and funded privately should carry limited rights but may be subject to negotiation. Finally, for COTS products, licenses will be transferable to the government (if not already purchased by or licensed to the government).

Sensor Integration and Data Access

Integration of sensor data from all domains requires a centralized, scalable solution capable of ingesting, normalizing, analyzing, and persisting real-time sensor feeds. This system needs to be aligned with a microservices architecture that allows for the dynamic ingestion of new sensor protocols as more advanced sensors are deployed to the field. Most importantly, once the data is in the system, the DoD needs truly multi-modal data analytics and fusion algorithms capable of making sense of the massive amount of sensor data constantly flowing through our networks. Currently, the amount of raw multi-domain data presented to users can quickly trigger cognitive overload. Analysts can then miss critical information hidden within the torrent of repetitive or unrelated data scrolling across their screens.
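The ingestion pattern described above adds new sensor protocols without disturbing the rest of the system. It can be sketched as a registry of protocol parsers that all emit one normalized record type, followed by a simple filter that suppresses repetitive updates. Everything here is illustrative. The two protocols (`proto_a`, `proto_b`), their field names, the `Observation` schema, and the five-second suppression window are assumptions. Real multi-modal fusion would go far beyond this.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class Observation:
    """Common normalized schema. The field names are illustrative assumptions."""
    sensor_id: str
    track_id: str
    lat: float
    lon: float
    time_s: float


# Registry of protocol parsers. Supporting a new sensor type means registering
# one more parser, without changing the ingest loop or downstream consumers.
PARSERS: Dict[str, Callable[[dict], Observation]] = {}


def parser(protocol: str):
    def wrap(fn: Callable[[dict], Observation]) -> Callable[[dict], Observation]:
        PARSERS[protocol] = fn
        return fn
    return wrap


@parser("proto_a")  # hypothetical format reporting degrees and seconds
def parse_a(raw: dict) -> Observation:
    return Observation(raw["sid"], raw["tid"], raw["lat"], raw["lon"], raw["t"])


@parser("proto_b")  # hypothetical format reporting microdegrees and milliseconds
def parse_b(raw: dict) -> Observation:
    return Observation(raw["sensor"], raw["track"], raw["lat_udeg"] / 1e6,
                       raw["lon_udeg"] / 1e6, raw["t_ms"] / 1000.0)


def ingest(feed: Iterable[dict], min_gap_s: float = 5.0) -> List[Observation]:
    """Normalize each message, dropping reports of a track that arrive within
    min_gap_s of its last kept report. Unregistered protocols raise KeyError."""
    last_kept: Dict[tuple, float] = {}
    kept: List[Observation] = []
    for raw in feed:
        obs = PARSERS[raw["protocol"]](raw)
        key = (obs.sensor_id, obs.track_id)
        if key in last_kept and obs.time_s - last_kept[key] < min_gap_s:
            continue  # repetitive update: suppress to reduce analyst overload
        last_kept[key] = obs.time_s
        kept.append(obs)
    return kept


feed = [
    {"protocol": "proto_a", "sid": "radar1", "tid": "T1", "lat": 35.0, "lon": -117.0, "t": 0.0},
    {"protocol": "proto_a", "sid": "radar1", "tid": "T1", "lat": 35.0, "lon": -117.0, "t": 1.0},
    {"protocol": "proto_b", "sensor": "eo2", "track": "T9",
     "lat_udeg": 35500000, "lon_udeg": -117250000, "t_ms": 2000},
]
result = ingest(feed)
for obs in result:
    print(obs)
```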

Parsons has been working with the government for years in pursuit of this goal, developing modular, government-owned frameworks capable of quickly ingesting new types of sensor data as they are introduced into the field. Providing the government with tools to bring together and analyze disparate types of data is the foundation for an effective JADC2 solution.

Both the raw data and the products created by analytics have become an important commodity, potentially the most important commodity available. There needs to be an understanding that data will be shared freely from the government to industry partners, and vice versa. Tools that sequester data or limit its unencumbered transferability need to be approached very cautiously, with the understanding that a lack of transparency could significantly affect future capability, cost, and data reconciliation. Data analytics technologies and techniques are widely used to help organizations make better-informed decisions and are critical for the successful implementation of Artificial Intelligence and Machine Learning (AI/ML). The data – AI/ML relationship is the most significant capability needed for JADO decision making to operate at the speeds necessary to gain or maintain an advantage over adversaries. Parsons has extensive experience in this area. For this piece, however, we have focused on the other foundational aspects that produce solid data, processes, and capabilities and ultimately facilitate the data – AI/ML relationship.

Integration with Existing Systems

Finally, it is critical to address the integration of existing systems into a modern agile construct. Upgrading highly complex systems built with legacy capabilities is a significant undertaking, especially when functionality must be maintained while the system is modernized or integrated into a new system. Closing the gap between currently fielded capabilities and the future systems needed to counter new enemy Tactics, Techniques, and Procedures (TTPs) is an ever-evolving challenge, especially as we encounter threats from near-peer aggressors. For example, new sensors use protocols that intelligence systems cannot ingest, intelligence systems cannot communicate with new mission planning or firing platforms, and so on.

Parsons’ capabilities reside at the intersection between all of these systems and allow them to communicate and normalize messages into standards that systems upstream can understand, which enables the government to limit costly changes to programs of record as new technology is deployed to the field. As an example, over the last two years, we have been modernizing our C2Core Air capability which is based on our Master Air Attack Planning Tool Kit (MAAPTK) application used in every Air Operations Center (AOC).
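Normalizing messages at the intersection between systems is essentially the adapter pattern. A translation layer converts one system's message format into a format another system already understands, so neither program of record has to change. The sketch below is hypothetical throughout. Both message layouts, the `TRACK` record type, and the unit conventions are invented for illustration and do not describe any Parsons or DoD interface.

```python
from typing import Dict

METERS_TO_FEET = 3.28084


def to_legacy_track(msg: Dict) -> str:
    """Translate a hypothetical new-sensor message into a hypothetical
    pipe-delimited record that an existing upstream system already parses."""
    alt_ft = round(msg["alt_m"] * METERS_TO_FEET)  # legacy side assumed to use feet
    return "|".join([
        "TRACK",
        msg["id"].upper(),  # assume the legacy system expects upper-case IDs
        f"{msg['lat']:.4f}",
        f"{msg['lon']:.4f}",
        str(alt_ft),
    ])


def from_legacy_track(line: str) -> Dict:
    """Reverse translation, so newer consumers can read legacy output too."""
    kind, track_id, lat, lon, alt_ft = line.split("|")
    if kind != "TRACK":
        raise ValueError(f"unexpected record type: {kind}")
    return {"id": track_id, "lat": float(lat), "lon": float(lon),
            "alt_m": round(int(alt_ft) / METERS_TO_FEET)}


line = to_legacy_track({"id": "t42", "lat": 35.1234, "lon": -117.5, "alt_m": 1000})
back = from_legacy_track(line)
print(line)
print(back)
```

Because the translation lives in its own layer, a new sensor format only requires a new adapter. Neither endpoint system has to be modified.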

The application has a diverse and distributed customer base that allows us to realize efficiencies and share sustainment and modernization costs. Through each of these efforts, we developed application enhancements with the larger community of users in mind, while ensuring all users could take advantage of the upgraded capabilities. Parsons modernized the code by migrating legacy capabilities into a web-based modular microservices architecture while soliciting Warfighter feedback through interim releases. We developed a hybrid approach for modernizing the software that enables the legacy desktop application and web-based UIs to share and act on the same data allowing for incremental delivery of web enhancements without losing functionality in the mission-proven desktop application. This approach reduces operational risk and costs while delivering capabilities to the Warfighter faster than the “green field” approach to modernization and modularization. Our modernization effort is yielding a deliverable with low technical debt, as shown by the recent positive Silverthread analysis of the current source code.
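In the hybrid approach, the legacy desktop application and the new web UIs share and act on the same data. This can be illustrated with a shared store that notifies every attached client of changes. This is a simplified, in-memory sketch under assumed names (`SharedMissionStore`, `Client`, the mission record fields). In practice the shared state would live in a database or service, and nothing here reflects how C2Core Air or MAAPTK is actually built.

```python
from typing import Callable, Dict, List

Listener = Callable[[str, dict], None]


class SharedMissionStore:
    """Single source of truth that both a legacy desktop client and a newer
    web client read and write, so features can move to the web incrementally."""

    def __init__(self) -> None:
        self._records: Dict[str, dict] = {}
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def get(self, key: str) -> dict:
        return dict(self._records[key])  # copy so callers cannot mutate shared state

    def put(self, key: str, record: dict, source: str) -> None:
        self._records[key] = dict(record)
        # Push the change to every client, tagged with which client made it.
        for listener in self._listeners:
            listener(key, {**record, "_source": source})


class Client:
    """Stand-in for either UI. It keeps a local view refreshed by the store."""

    def __init__(self, name: str, store: SharedMissionStore) -> None:
        self.name = name
        self.view: Dict[str, dict] = {}
        store.subscribe(self.on_change)

    def on_change(self, key: str, record: dict) -> None:
        self.view[key] = record


store = SharedMissionStore()
desktop = Client("desktop", store)
web = Client("web", store)
# An edit made in the web UI is immediately visible to the desktop client.
store.put("mission-1", {"target": "T42", "tot": "1200Z"}, source="web")
print(desktop.view["mission-1"])
```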

Parsons selected Silverthread, an independent third-party software assessment firm, to provide cumulative technical debt analysis of developed code. The analysis specifically addressed architecture degradation and code implementation deficiencies of our new microservices architecture in comparison to the legacy builds. After completing the analysis, Silverthread reported that the current code was in the top 10% when compared to peer system benchmarks and had substantially improved compared to the original legacy codebase. Their report stated that 1) the current codebase contained no critical cores, a significant improvement from a code-level view; and 2) the codebase had no areas that needed immediate attention to mitigate or avoid the proliferation of complex design problems. Their report also indicated that the new codebase had much lower development costs and higher code quality than both the previous legacy codebase and the large majority of competitor capabilities.
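Silverthread's analysis methods are its own and are not reproduced here. Architectural tangling can still be illustrated generically. One indicator used in design structure matrix analyses is a large group of files that all depend on one another, directly or transitively. The sketch below finds the largest such group, which is the largest strongly connected component of a file dependency graph, using Tarjan's algorithm. The file names are hypothetical, and treating this group as a proxy for a “core” is an assumption of the example.

```python
from typing import Dict, List, Set


def largest_cyclic_group(deps: Dict[str, List[str]]) -> Set[str]:
    """Return the largest group of two or more files that all depend on each
    other (a strongly connected component), or an empty set if none exists.
    Recursive for brevity; very large codebases would need an iterative version."""
    index: Dict[str, int] = {}
    low: Dict[str, int] = {}
    stack: List[str] = []
    on_stack: Set[str] = set()
    best: Set[str] = set()
    counter = 0
    nodes = set(deps) | {d for targets in deps.values() for d in targets}

    def visit(v: str) -> None:
        nonlocal counter, best
        index[v] = low[v] = counter
        counter += 1
        stack.append(v)
        on_stack.add(v)
        for w in deps.get(v, []):
            if w not in index:
                visit(w)
                low[v] = min(low[v], low[w])
            elif w in on_stack:
                low[v] = min(low[v], index[w])
        if low[v] == index[v]:  # v is the root of a component: pop it off
            component = set()
            while True:
                w = stack.pop()
                on_stack.discard(w)
                component.add(w)
                if w == v:
                    break
            if len(component) > 1 and len(component) > len(best):
                best = component

    for v in sorted(nodes):  # sorted for deterministic results
        if v not in index:
            visit(v)
    return best


deps = {"a.py": ["b.py"], "b.py": ["c.py"], "c.py": ["a.py", "d.py"], "d.py": []}
print(sorted(largest_cyclic_group(deps)))
```

A shrinking or absent cyclic group is one rough sign that a modernized codebase has become less entangled than its predecessor.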

This use case gave us a process and a greater understanding of how to deliver a more scalable, flexible, and sustainable software capability without losing functionality of the legacy systems during transition. This knowledge, linked with our DevSecOps environment and agile process, provides an outstanding model and proven path for moving legacy systems into a new, more interconnected world.

Conclusion

Given the vast range of challenges the DoD is forced to face in combating adversaries in JADO, it’s difficult for any single vendor to come in and solve every challenge alone. Therefore, the United States Government, Allies, and Industry partners must continue to work together to push forward the vision of JADO and JADC2. Acquisition processes must continue to be refined to meet this seismic shift in technological capabilities and must also allow for close partnerships and transparency between government and industry as opposed to the legacy client-supplier mentality. Parsons stands ready as a teammate across government and industry to ensure Commanders and Warfighters have the tools available to defend our nation and save lives.

This article was featured in the 2020 PSC Annual Conference Thought Leadership Compendium.